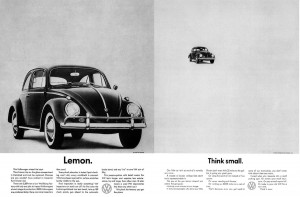

In the 1950s and 60s, Volkswagen’s “Think Small” ad for the Beetle marked a radical shift in an era dominated by large cars in the US. Anyone who has studied advertising or works in the industry will recall this iconic ad, considered a classic in the field in several ways; above all, it created a new way for consumers to think about the advantages of small cars over big ones.

Today, for anyone in the field of data science, “big data” is the buzzword. More and more jobs are emerging in this area, and every day I see several such openings on LinkedIn. So I see the world of data science facing a similar divide: big data versus small data. We hear far less about “small data” than about “big data”, which sounds fancier and sexier and requires highly sophisticated programming skills to model the problem. Yet there are distinct problems and areas of application where each has its own place and utility.

Big data and some of its applications: Big data is associated with machine learning and with applying algorithms to data extracted from the web to search for patterns and trends across millions of records. For instance, in text-based analysis this could mean crawling millions of newspaper articles and editorials so that a computer can identify the specific articles a researcher or analyst is looking for. For a spatial statistician, it could mean using a computer algorithm to extract location information about specific events of interest from millions of records. The world of big data analytics is dominated by computer scientists, statisticians, and political scientists interested in issues such as conflict or public opinion. It is also dominated by companies and industries looking to capture consumer behavior and trends; companies like Amazon and Google, for example, can model consumer patterns and forecast demand or decision-making.

Small data and its applications: We don’t hear much about “small data”, but as a public health and policy professional, I see many problems in public health, epidemiology, and census work that involve counts and small numbers, which must be modeled correctly before they can support valid inferences. This requires domain knowledge of a distinct set of statistical models and tools. Knowledge of small area analysis and estimation would be helpful at organizations such as the CDC and the Census Bureau, as well as in community-level program planning and evaluation.
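As one illustration of why small counts need care, a rate built on a handful of cases is very uncertain. The sketch below computes an exact (Garwood) 95% confidence interval for a Poisson count using only the standard library, by bisecting on the Poisson CDF. The function names and the example figures (5 cases in a population of 20,000) are hypothetical, chosen only to show how wide the interval is for a small area.

```python
import math

def poisson_cdf(k: int, lam: float) -> float:
    """P(X <= k) for X ~ Poisson(lam), summed term by term (adequate for small k and lam)."""
    term = math.exp(-lam)
    total = term
    for i in range(1, k + 1):
        term *= lam / i
        total += term
    return total

def _solve_decreasing(f, target: float, hi: float, iters: int = 100) -> float:
    """Bisection on [0, hi] for f(lam) == target, assuming f decreases as lam grows."""
    lo = 0.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) > target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def exact_poisson_ci(k: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact two-sided CI for the Poisson mean given an observed count k."""
    hi = 10.0 * (k + 1) + 10.0  # generous search bound for small counts
    # Lower bound: P(X >= k | lam) = alpha/2, i.e. P(X <= k-1 | lam) = 1 - alpha/2.
    lower = 0.0 if k == 0 else _solve_decreasing(lambda lam: poisson_cdf(k - 1, lam), 1 - alpha / 2, hi)
    # Upper bound: P(X <= k | lam) = alpha/2.
    upper = _solve_decreasing(lambda lam: poisson_cdf(k, lam), alpha / 2, hi)
    return lower, upper

# Hypothetical small area: 5 cases among 20,000 residents.
cases, population = 5, 20_000
low, high = exact_poisson_ci(cases)
print(f"rate per 100k: {cases / population * 1e5:.1f} "
      f"(95% CI {low / population * 1e5:.1f} to {high / population * 1e5:.1f})")
```

The interval runs from roughly a third of the point estimate to more than twice it, which is the kind of instability that small area methods try to address.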

a) Survey methodology: Large-scale health and population surveys implemented in developing countries draw samples that are representative of the population at a larger regional scale, but often not at a small geographic scale. In such situations, small area estimation techniques or interpolation are used to make inferences about a specific health outcome in geographic units where sampling was not done.
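A common idea behind small area estimation is to “borrow strength”: combine a noisy direct survey estimate for a small area with a more stable synthetic estimate from the wider region or a model. The sketch below shows a composite estimator in the spirit of the Fay-Herriot model, assuming the between-area variance and the synthetic estimates are already known (in practice both are estimated from the data). The `Area` class, field names, and all numbers are hypothetical. An area with no sample at all would simply receive its synthetic estimate.

```python
from dataclasses import dataclass

@dataclass
class Area:
    name: str
    direct: float        # direct survey estimate for the area (e.g. prevalence)
    sampling_var: float  # sampling variance of the direct estimate; large when few respondents
    synthetic: float     # estimate borrowed from the wider region or a model (assumed given)

def composite_estimate(area: Area, between_area_var: float) -> float:
    """Shrink the direct estimate toward the synthetic one.

    gamma = between_area_var / (between_area_var + sampling_var), so noisy direct
    estimates (large sampling_var) are pulled harder toward the synthetic value.
    """
    denom = between_area_var + area.sampling_var
    if denom <= 0:
        raise ValueError("variances must not both be zero")
    gamma = between_area_var / denom
    return gamma * area.direct + (1 - gamma) * area.synthetic

# Hypothetical districts with the same direct estimate but very different sample sizes.
areas = [
    Area("district_a", direct=0.30, sampling_var=0.01, synthetic=0.20),    # few respondents
    Area("district_b", direct=0.30, sampling_var=0.0001, synthetic=0.20),  # many respondents
]
estimates = {a.name: composite_estimate(a, between_area_var=0.01) for a in areas}
print(estimates)
```

The thinly sampled district lands halfway between its direct and synthetic values, while the well-sampled one stays close to its own survey estimate.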

b) Sentinel surveillance: This involves surveillance at specific sites or locations to detect disease outbreaks or new cases of specific diseases. According to the WHO, sentinel surveillance is appropriate for gathering high-quality data when a passive surveillance system (generally based on data reported by health workers and health facilities) is not adequate to identify causal factors for certain diseases. However, because data are monitored only at selected sites, hospitals, or locations, sentinel surveillance may not be appropriate for detecting cases outside those sites.

c) Community-based program planning: In community and program planning, one application is improving a health intervention at a specific site or location. For instance, USAID allocates funds for HIV testing and treatment at specific sites in several countries, so it may want to know which clinics are performing better than others. According to the PEPFAR Annual Report to Congress, there is wide variation in disease burden and HIV risk at the sub-national and sub-population levels. Hence, knowing how cases are distributed around specific sites, and how service uptake varies, can help improve programs. Similarly, AidData, a collaboration among three universities that tracks where aid money goes by country, year, program, and project type, works in the area of geospatial impact evaluation. It borrows traditional statistical methods such as difference-in-differences and propensity score matching, but also takes the location of each project site into account. It identifies sites where the World Bank did not implement a project, which then act as control sites. By accounting for the location of project implementation sites, it captures heterogeneity in program outcomes when conducting impact assessments.
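To make the difference-in-differences idea concrete, the sketch below computes the basic 2x2 estimate from site-level records: the change over time at project sites minus the change over time at control sites. It is not AidData’s actual procedure, which also matches sites on location and other covariates; it assumes the parallel-trends condition holds and uses hypothetical clinic data (for example, a testing rate before and after a project).

```python
from statistics import mean

def difference_in_differences(records):
    """Return the 2x2 difference-in-differences estimate.

    records: iterable of (treated, post, outcome) tuples, where `treated` marks
    project sites, `post` marks observations after the project started, and
    `outcome` is a site-level measure.
    """
    cells = {}
    for treated, post, outcome in records:
        cells.setdefault((bool(treated), bool(post)), []).append(outcome)
    required = {(t, p) for t in (True, False) for p in (True, False)}
    missing = required - set(cells)
    if missing:
        raise ValueError(f"no observations for (treated, post) cells: {sorted(missing)}")
    m = {cell: mean(values) for cell, values in cells.items()}
    change_treated = m[(True, True)] - m[(True, False)]    # before/after change at project sites
    change_control = m[(False, True)] - m[(False, False)]  # same change at control sites
    return change_treated - change_control

# Hypothetical clinics: (treated, post, outcome)
sites = [
    (True, False, 10), (True, False, 12), (True, True, 20), (True, True, 22),
    (False, False, 10), (False, False, 10), (False, True, 14), (False, True, 16),
]
estimate = difference_in_differences(sites)
print(estimate)
```

Project sites improved by 10 units and control sites by 5, so the estimated program effect is the difference of 5; subtracting the control change is what removes the shared time trend.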

Economics as a discipline has always been divided into macroeconomics and microeconomics. Is it time we divided statistics into macro and micro as well?